Q1. What is SSIS? How is it related to SQL Server?

SQL Server Integration Services (SSIS) is a component of SQL Server that can be used to perform a wide range of data migration and ETL operations. SSIS is part of the Microsoft Business Intelligence (MSBI) stack of SQL Server.

It is a platform for data integration and workflow applications. It provides a fast and flexible tool for data extraction, transformation, and loading (ETL) that can serve both OLTP and OLAP systems. The tool may also be used to automate maintenance of SQL Server databases and multidimensional data sets.

Q2: What are the tools associated with SSIS?

We use Business Intelligence Development Studio (BIDS) or SQL Server Data Tools (SSDT), together with SQL Server Management Studio (SSMS), to work with SSIS projects during development.

We use SSMS to manage the SSIS Packages and Projects.

Q3: What is the difference between BIDS and SSDT?

Q4: What are the differences between DTS and SSIS?

| Data Transformation Services (DTS) | SQL Server Integration Services (SSIS) |
| --- | --- |
| Limited error handling | Complex and powerful error handling |
| Message boxes in ActiveX scripts | Message boxes in .NET scripting |
| No deployment wizard | Interactive deployment wizard |
| Limited set of transformations | Good number of transformations |
| No BI functionality | Complete BI integration |

Q5. Explain the architecture of SSIS.

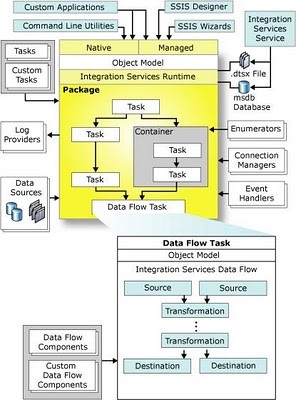

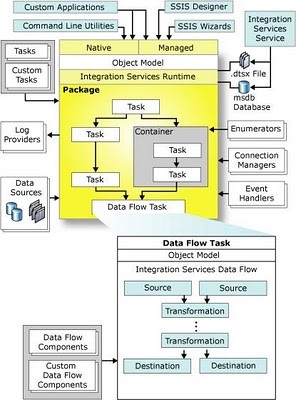

SSIS architecture consists of four key parts:

a) Integration Services service: monitors running Integration Services packages and manages the storage of packages.

b) Integration Services object model: includes a managed API for accessing Integration Services tools, command-line utilities, and custom applications.

c) Integration Services runtime and run-time executables: it saves the layout of packages, runs packages, and provides support for logging, breakpoints, configuration, connections, and transactions. The Integration Services run-time executables are the package, containers, tasks, and event handlers that Integration Services includes, and custom tasks.

d) Data flow engine: provides the in-memory buffers that move data from source to destination.

Q6: What is a workflow in SSIS 2014?

A workflow is a set of instructions that tells the program executor how to execute the tasks and containers within SSIS packages.

Q7. What is an SSIS Package?

An SSIS package is a collection of tasks that extract, transform, and load data. Though the primary purpose of SSIS packages is ETL, they can also be used for maintenance tasks such as database backups, index rebuilds, deleting old backups, etc.

Q8. How do you create SSIS Packages? OR What tools do you use for creating SSIS Packages?

You can create SSIS packages using Business Intelligence Development Studio, or "BIDS" for short. It can be installed from the same installation media that is used to install SQL Server. If all the features are selected at the time of installation, BIDS is installed along with Management Studio, Books Online, and other tools.

Q9: What is the control flow?

A control flow consists of one or more tasks and containers that execute when the package runs. To control order or define the conditions for running the next task or container in the package control flow, we use precedence constraints to connect the tasks and containers in a package. A subset of tasks and containers can also be grouped and run repeatedly as a unit within the package control flow. SQL Server Integration Services (SSIS) provides three different types of control flow elements: Containers that provide structures in packages, Tasks that provide functionality, and Precedence Constraints that connect the executables, containers, and tasks into an ordered control flow.

Q10: What is the data flow?

A data flow consists of the sources and destinations that extract and load data, the transformations that modify and extend data, and the paths that link sources, transformations, and destinations. The Data Flow task is the executable within the SSIS package that creates, orders, and runs the data flow. A separate instance of the data flow engine is opened for each Data Flow task in a package. Data Sources, Transformations, and Data Destinations are the three important categories in the Data Flow.

Q11. What is the Data Flow Engine?

The Data Flow Engine, also called the SSIS pipeline engine, is responsible for managing the flow of data from the source to the destination and performing transformations (lookups, data cleansing etc.). Data flow uses memory oriented architecture, called buffers, during the data flow and transformations which allows it to execute extremely fast. This means the SSIS pipeline engine pulls data from the source, stores it in buffers (in-memory), does the requested transformations in the buffers and writes to the destination. The benefit is that it provides the fastest transformation as it happens in memory and we don't need to stage the data for transformations in most cases.
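The buffer-oriented flow described above can be sketched in a few lines of Python. This is only an illustration of the idea (read a chunk of rows into memory, transform it in place, write it out), not how the SSIS pipeline engine is implemented; the function names and the `buffer_size` parameter are hypothetical.

```python
from itertools import islice


def read_in_buffers(rows, buffer_size):
    """Yield lists of at most buffer_size rows, standing in for in-memory buffers."""
    iterator = iter(rows)
    while True:
        buffer = list(islice(iterator, buffer_size))
        if not buffer:
            return
        yield buffer


def run_pipeline(source_rows, transform, buffer_size=3):
    """Pull rows from the source, transform each buffer in place, load the destination."""
    destination = []
    for buffer in read_in_buffers(source_rows, buffer_size):
        # The transformation overwrites rows inside the same buffer,
        # so no staging area is needed between source and destination.
        for index, row in enumerate(buffer):
            buffer[index] = transform(row)
        destination.extend(buffer)
    return destination


if __name__ == "__main__":
    print(run_pipeline(range(5), lambda value: value * 2, buffer_size=2))
```

Because each buffer is transformed in memory and handed straight to the destination, nothing has to be written to a staging table in between.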

Q12. What is a Transformation?

A transformation simply means bringing the data into a desired format. For example, you are pulling data from the source and want to ensure only distinct records are written to the destination, so duplicates are removed. Another example is when you have master/reference data and want to pull only related data from the source, so you need some sort of lookup. There are around 30 transformations available, and this set can be extended further with custom-built components if needed.

Q13. What is a Task?

A task is very much like a method in a programming language: it represents and carries out an individual unit of work. There are broadly two categories of tasks in SSIS, Control Flow tasks and Database Maintenance tasks. All Control Flow tasks are operational in nature except Data Flow tasks. Although there are around 30 control flow tasks that you can use in your package, you can also develop your own custom tasks in the .NET programming language of your choice.

Q14. What is a Precedence Constraint and what types of Precedence Constraint are there?

SSIS allows you to place as many tasks as you want in the control flow. You can connect all these tasks using connectors called Precedence Constraints. Precedence Constraints allow you to define the logical sequence in which the tasks should be executed. You can also specify a condition to be evaluated before the next task in the flow is executed.

These are the types of precedence constraints; the condition can be a constraint, an expression, or both:

Success (the next task is executed only when the last task completed successfully) or

Failure (the next task is executed only when the last task failed) or

Completion (the next task is executed whether the last task succeeded or failed).
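The three constraint types can be summarized as a small decision function. The sketch below is hypothetical Python, not SSIS code: `should_run` and its parameters are invented names, the third type is written as "Completion", and the optional expression is combined with the constraint using a logical AND (only one of the ways SSIS can combine the two).

```python
def should_run(constraint, previous_succeeded, expression_result=True):
    """Decide whether the next task runs, given the outcome of the previous one."""
    if constraint == "Success":
        constraint_met = previous_succeeded
    elif constraint == "Failure":
        constraint_met = not previous_succeeded
    elif constraint == "Completion":
        # Runs regardless of how the previous task ended.
        constraint_met = True
    else:
        raise ValueError(f"unknown constraint type: {constraint}")
    # Assumption: constraint AND expression must both hold.
    return constraint_met and expression_result


if __name__ == "__main__":
    for kind in ("Success", "Failure", "Completion"):
        print(kind, should_run(kind, previous_succeeded=False))
```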

Q15. What is a container and how many types of containers are there?

A container is a logical grouping of tasks which allows you to manage the scope of the tasks together.

These are the types of containers in SSIS:

Sequence Container - Used for grouping logically related tasks together

For Loop Container - Used when you want to have repeating flow in package

For Each Loop Container - Used for enumerating each object in a collection; for example a record set or a list of files.

Apart from the above mentioned containers, there is one more container called the Task Host Container which is not visible from the IDE, but every task is contained in it (the default container for all the tasks).

Q16. What are variables and what is variable scope?

A variable is used to store values. There are basically two types of variables, System Variable (like ErrorCode, ErrorDescription, PackageName etc) whose values you can use but cannot change and User Variable which you create, assign values and read as needed. A variable can hold a value of the data type you have chosen when you defined the variable.

Variables can have different scopes depending on where they were defined. For example, you can have package-level variables, which are accessible to all the tasks in the package, and container-level variables, which are accessible only to the tasks within that container.
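Scope resolution of this kind can be pictured as a lookup that walks from a task up through its containers to the package. The following Python sketch uses a hypothetical `Scope` class purely to illustrate that rule; it is not part of any SSIS API.

```python
class Scope:
    """A package, container, or task that can hold variables and has an optional parent."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.variables = {}

    def resolve(self, variable_name):
        """Look for the variable here first, then in each enclosing scope."""
        scope = self
        while scope is not None:
            if variable_name in scope.variables:
                return scope.variables[variable_name]
            scope = scope.parent
        raise KeyError(variable_name)


if __name__ == "__main__":
    package = Scope("Package")
    package.variables["BatchId"] = 42
    container = Scope("Sequence Container", parent=package)
    container.variables["FileName"] = "orders.csv"
    inner_task = Scope("Task inside container", parent=container)
    outer_task = Scope("Task outside container", parent=package)

    print(inner_task.resolve("BatchId"))   # package-level variable is visible
    print(inner_task.resolve("FileName"))  # container-level variable is visible
    try:
        outer_task.resolve("FileName")
    except KeyError:
        print("FileName is not visible outside the container")
```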

Q17. What is the transaction support feature in SSIS?

When you execute a package, every task of the package executes in its own transaction. What if you want to execute two or more tasks in a single transaction? This is where the transaction support feature helps. You can group all your logically related tasks into a single group. Next, you can set the transaction property appropriately to enable a transaction so that all the tasks in the group run in a single transaction. This way you can ensure that either all of the tasks complete successfully or, if any of them fails, the whole transaction is rolled back.

Q18. What properties do you need to configure in order to use the transaction feature in SSIS?

Suppose you want to execute 5 tasks in a single transaction, in this case you can place all 5 tasks in a Sequence Container and set the TransactionOption and IsolationLevel properties appropriately.

The TransactionOption property expects one of these three values:

Supported - The container/task does not create a separate transaction, but if the parent object has already initiated a transaction, it participates in it

Required - The container/task starts a new transaction unless the parent object has already initiated one, in which case it joins the parent's transaction

NotSupported - The container/task neither creates a transaction nor participates in any transaction initiated by the parent object

The isolation level dictates how two or more transactions maintain consistency and concurrency when they are running in parallel.
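As a rough mental model, the three TransactionOption values can be expressed as a function that decides which transaction a container or task ends up in. The Python below is a hypothetical sketch (`resolve_transaction` and `start_new` are invented names), written on the assumption, taken from the documented SSIS behavior, that Required joins a transaction the parent has already started and starts one only when none exists.

```python
def resolve_transaction(option, parent_transaction, start_new):
    """Return the transaction the executable runs in, or None for no transaction."""
    if option == "Required":
        # Join the parent's transaction if there is one, otherwise start a new one.
        if parent_transaction is not None:
            return parent_transaction
        return start_new()
    if option == "Supported":
        # Never starts a transaction, but participates in the parent's if present.
        return parent_transaction
    if option == "NotSupported":
        # Runs outside any transaction, even if the parent has one.
        return None
    raise ValueError(f"unknown TransactionOption: {option}")


if __name__ == "__main__":
    container_txn = resolve_transaction("Required", None, lambda: "txn-1")
    for option in ("Required", "Supported", "NotSupported"):
        print(option, resolve_transaction(option, container_txn, lambda: "txn-2"))
```

With a Sequence Container set to Required and its five tasks left at Supported, every task resolves to the container's transaction, which matches the scenario described above.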

Q19. When I enabled transactions in an SSIS package, it failed with this exception: "The Transaction Manager is not available. The DTC transaction failed to start." What caused this exception and how can it be fixed?

SSIS uses the MS DTC (Microsoft Distributed Transaction Coordinator) Windows Service for transaction support. As such, you need to ensure this service is running on the machine where you are actually executing the SSIS packages or the package execution will fail with the exception message as indicated in this question.

Q20. What is event handling in SSIS?

Like many programming languages, SSIS and its components raise different events during execution. You can write an event handler to capture an event and handle it in a few different ways. For example, suppose you have a data flow task and, before it executes, you want to make some environmental changes such as creating a table to write data into or deleting/truncating a table you want to write to. Along the same lines, after execution of the data flow task you want to clean up some staging tables. In this circumstance you can write an event handler for the OnPreExecute event of the data flow task, which gets executed before the actual execution of the data flow. Similarly, you can write an event handler for the OnPostExecute event of the data flow task, which gets executed after the data flow task itself. Please note that not all tasks raise the same events. Some events are specific to a particular task, so you can use them with that object and not with others.

Q21. How do you write an event handler?

First, open your SSIS package in Business Intelligence Development Studio (BIDS) and click on the Event Handlers tab. Next, select the executable/task from the left side combo box and then select the event you want to handle in the right side combo box. Finally, click on the hyperlink to create the event handler. So far you have only created the event handler; you have not specified any sort of action. For that, simply drag the required task from the toolbox onto the event handler designer surface and configure it appropriately.

Q23. What is SSIS validation?

SSIS validates the package and all of its tasks to ensure they have been configured correctly, so that with a given set of configurations and values all the tasks and the package will execute successfully. In other words, during the validation process SSIS checks that the source and destination locations are accessible and that the metadata about the source and destination tables stored with the package is correct, so that the task will not fail when executed. The validation process reports warnings and errors depending on the validation failure detected. For example, if source/destination tables or columns have been changed or dropped, this shows as an error, whereas if you are accessing more columns than are written to the destination object, this is flagged as a warning.

Q25. Define early validation (package level validation) versus late validation (component level validation).

When a package is executed, the package goes through the validation process. All of the components/tasks of package are validated before actually starting the package execution. This is called early validation or package level validation. During execution of a package, SSIS validates the component/task again before executing that particular component/task. This is called late validation or component level validation.

Q26. What is DelayValidation and what is the significance?

As mentioned above, during early validation all of the components of the package are validated along with the package itself. If any component/task fails to validate, SSIS will not start the package execution. In most cases this is fine, but what if the second task is dependent on the first task? For example, say you are creating a table in the first task and referring to the same table in the second task. When early validation starts, it will not be able to validate the second task because the dependent table has not been created yet. Keep in mind that early validation is performed before the package execution starts. So what should we do in this case? How can we ensure the package is executed successfully and the logical flow of the package is correct? This is where you can use the DelayValidation property. In the above scenario you should set the DelayValidation property of the second task to TRUE, in which case early validation (package level validation) is skipped for that task and the task is only validated during late validation (component level validation). Please note that with the DelayValidation property you can only skip early validation for that specific task; there is no way to skip late or component level validation.
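The create-table-then-use-it scenario can be simulated with a toy package runner. Everything here is hypothetical Python (`Task`, `run_package`, `build_tasks`, and the in-memory `tables` set standing in for a database); it only models the order in which early and late validation happen.

```python
from dataclasses import dataclass
from typing import Callable


class ValidationError(Exception):
    pass


@dataclass
class Task:
    name: str
    validate: Callable[[], bool]
    execute: Callable[[], None]
    delay_validation: bool = False


def run_package(tasks):
    # Early (package level) validation: skipped for tasks that delay validation.
    for task in tasks:
        if not task.delay_validation and not task.validate():
            raise ValidationError(f"early validation failed: {task.name}")
    # Late (component level) validation always runs just before each task.
    for task in tasks:
        if not task.validate():
            raise ValidationError(f"late validation failed: {task.name}")
        task.execute()


def build_tasks(delay_second_task):
    tables = set()  # stand-in for the tables that exist in the database
    create = Task("Create table", lambda: True, lambda: tables.add("staging"))
    load = Task(
        "Load table",
        lambda: "staging" in tables,  # valid only once the table exists
        lambda: None,
        delay_validation=delay_second_task,
    )
    return [create, load]


if __name__ == "__main__":
    run_package(build_tasks(delay_second_task=True))
    print("package ran with DelayValidation set on the second task")
    try:
        run_package(build_tasks(delay_second_task=False))
    except ValidationError as error:
        print(error)
```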

Q27. How would you do Logging in SSIS?

SSIS logging configuration is a built-in feature that can log the details of various events, such as OnError and OnWarning, to several destinations, for example a flat file, a SQL Server table, an XML file, or SQL Profiler.

Q28. How would you do Error Handling?

An SSIS package can mainly have two types of errors:

a) Procedure error: can be handled in the control flow through precedence constraints, by redirecting the execution flow.

b) Data error: is handled in the Data Flow task by redirecting the data flow using the Error Output of a component.
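The idea behind an error output is that a failing row is diverted to a second path instead of stopping the whole data flow. The Python sketch below illustrates that pattern with a hypothetical `convert_with_error_output` function; it is an analogy, not the SSIS component model.

```python
def convert_with_error_output(rows, convert):
    """Apply convert to each row; send failing rows to a separate error output."""
    good_rows = []
    error_rows = []
    for row in rows:
        try:
            good_rows.append(convert(row))
        except (ValueError, TypeError) as error:
            # Keep the original row plus a description, like an error output path.
            error_rows.append((row, str(error)))
    return good_rows, error_rows


if __name__ == "__main__":
    good, errors = convert_with_error_output(["1", "x", "3"], int)
    print(good)
    print([row for row, _ in errors])
```

The rows on the error path can then be written to their own destination for later inspection while the good rows continue to the main destination.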

Q29. How to pass property value at Run time? How do you implement Package Configuration?

A property value like connection string for a Connection Manager can be passed to the package using package configurations. Package Configuration provides different options like XML File, Environment Variables, SQL Server Table, Registry Value or Parent package variable.

Q30. How would you deploy a SSIS Package on production?

1. Create the deployment utility by setting the project's create-deployment-utility property to true.

2. The utility is created in the bin folder of the solution as soon as the package is built.

3. Copy all the files in the utility folder and use the manifest file to deploy the package to production.

Q31. How would you pass a variable value to Child Package?

This is a very frequent question, and it looks more complicated to programmers than it is.

Passing a variable value to a child package is actually a trivial task. We can pass the value by configuring a parent package variable in the package configuration, but there is an easier way to achieve this, and it rests on the fundamental principle of variable scope.

When you call a child package, it behaves like a container itself, and all the variables defined higher up in the hierarchy are accessible in the child package.

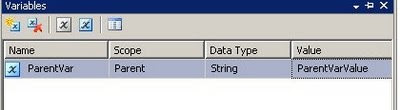

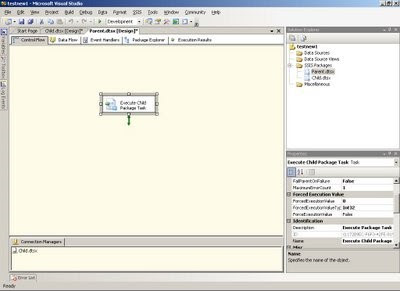

Let me show this with an example in which I will declare a variable "ParentVar" in my parent package and call a Child package which will access "ParentVar" and display in a msgbox.

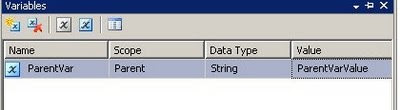

1) Parent: Create Parent Package and declare a variable "ParentVar"

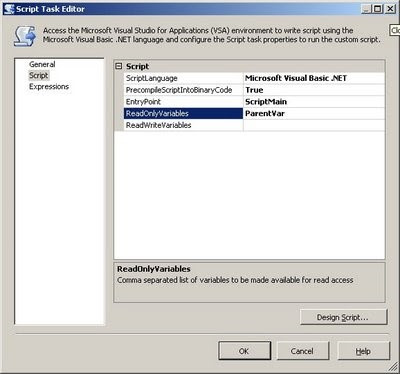

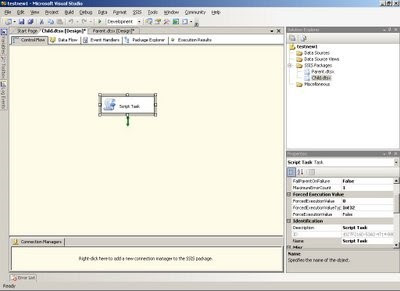

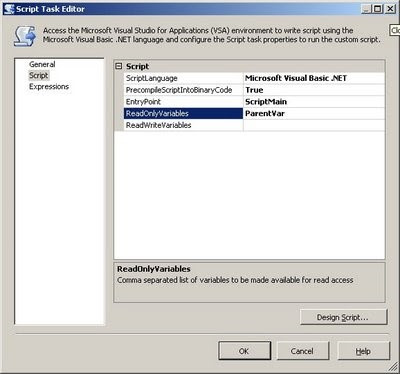

2) Child: Create a Child package and use a script task and define readonly variable as ParentVar

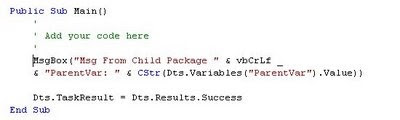

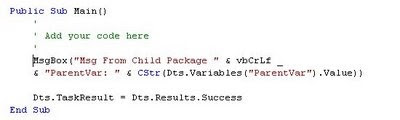

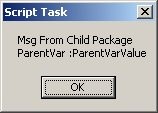

3) Child: Now in the script you can use ParentVar like any other variable, e.g. I am using it to display a msgbox. I would suggest creating another variable in the child package, assigning the parent package variable's value to it, and using that child package variable throughout the package.

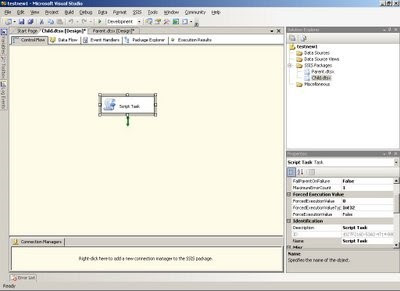

4) Child: Whole Child package will look like

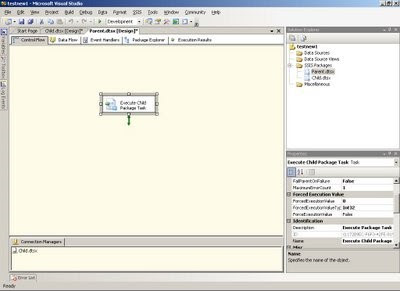

5) Parent: Now in parent package call the child package through Execute Package task.

The Parent package will look like

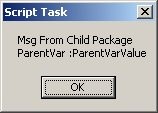

6) Result: Execute the parent package. It will in turn call the child package, which will display the msgbox.

This is a simple method for using a parent package variable in a child package.

Q32. What are new features in SSIS 2008?

1). Improved Parallelism of Execution Trees:

The biggest performance improvement in SSIS 2008 is the incorporation of parallelism in the processing of execution trees. In SSIS 2005, each execution tree used a single thread, whereas in SSIS 2008 the data flow engine was redesigned to use multiple threads and take advantage of dynamic scheduling to execute multiple components in parallel, including components within the same execution tree.

2) Any .NET language for Scripting:

SSIS 2008 incorporates the new Visual Studio Tools for Applications (VSTA) scripting engine. The advantage of VSTA is that it enables the user to use any .NET language for scripting.

3) New ADO.NET Source and Destination Component:

SSIS 2008 gets a new Source and Destination Component for ADO.NET Record sets.

4) Improved Lookup Transformation:

In SSIS 2008, the Lookup Transformation has faster cache loading and lookup operations. It has new caching options, including the ability for the reference dataset to use a cache file (.caw) accessed by the Cache Connection Manager. In addition, the same cache can be shared between multiple Lookup Transformations.

5) New Data Profiling Task:

SSIS 2008 has a new debugging aid, the Data Profiling Task, that can help the user analyze the data flows occurring in the package. In many cases, execution errors are caused by unexpected variations in the data that is being transferred. The Data Profiling Task can help users discover the cause of these errors by giving better visibility into the data flow.

6) New Connections Project Wizard:

One of the main usability enhancements in SSIS 2008 is the new Connections Project Wizard. The Connections Project Wizard guides the user through the steps required to create sources and destinations.

Q33. What are SSIS Connection Managers?

When we talk of integrating data, we are actually pulling data from different sources and writing it to a destination. But how do you get connected to the source and destination systems? This is where connection managers come into the picture. A connection manager represents a connection to a system and includes the data provider information, the server name, database name, authentication mechanism, etc.

Q34. What are source and destination adapters?

A source adapter indicates a source in the Data Flow to pull data from. The source adapter uses a connection manager to connect to a source, and along with it you can also specify the query method and the query used to pull data from the source.

Similar to a source adapter, the destination adapter indicates a destination in the Data Flow to write data to. Like the source adapter, the destination adapter uses a connection manager to connect to a target system, and along with that you also specify the target table and writing mode, i.e. write one row at a time or do a bulk insert, as well as several other properties.

Please note, the source and destination adapters can both use the same connection manager if you are reading and writing to the same database.

Q35. What is the Data Path and how is it different from a Precedence Constraint?

A Data Path is used in a Data Flow task to connect the different components of a Data Flow and show the transition of the data from one component to another. A data path contains the metadata of the data flowing through it, such as the columns, data type, size, etc. As for the difference between the two: the data path is used in the data flow and shows the flow of data, whereas the precedence constraint is used in the control flow and shows the transition of control from one task to another.

Q36. What is a Data Viewer utility and what is it used for?

The data viewer utility is used in Business Intelligence Development Studio during development or when troubleshooting an SSIS Package. The data viewer utility is placed on a data path to see what data is flowing through that specific data path during execution. The data viewer utility displays rows from a single buffer at a time, so you can click on the next or previous icons to go forward and backward to display data.

Q37. What is an SSIS breakpoint? How do you configure it? How do you disable or delete it?

A breakpoint allows you to pause the execution of the package in Business Intelligence Development Studio during development or when troubleshooting an SSIS Package. You can right click on the task in the control flow, click on the Edit Breakpoint menu, and in the Set Breakpoint window specify when you want execution to be halted/paused, for example on the OnPreExecute, OnPostExecute, or OnError events. To toggle a breakpoint, delete all breakpoints, or disable all breakpoints, go to the Debug menu and click on the respective menu item. You can even specify different conditions for hitting the breakpoint.

Q38. What is SSIS event logging?

Like any modern programming language, SSIS raises different events during the package execution life cycle. You can log these events to trace the execution of your SSIS package and its tasks. You can also write your own custom messages as custom log entries. You can enable event logging at the package level as well as at the task level. You can also choose any specific event of a task or a package to be logged. This is essential when you are troubleshooting your package and trying to understand a performance problem or the root cause of a failure.

Q39. What are the different SSIS log providers?

There are several places where you can log execution data generated by an SSIS event log:

SSIS log provider for Text files

SSIS log provider for Windows Event Log

SSIS log provider for XML files

SSIS log provider for SQL Profiler

SSIS log provider for SQL Server, which writes the data to the msdb..sysdtslog90 or msdb..sysssislog table depending on the SQL Server version.

Q40. How do you enable SSIS event logging?

SSIS provides a granular level of control in deciding what to log and where to log. To enable event logging for an SSIS Package, right click in the control flow area of the package and click on Logging. In the Configure SSIS Logs window you will notice all the tasks of the package listed in the tree view on the left side. You can choose specifically which tasks you want to enable logging for. On the right side you will notice two tabs. On the Providers and Logs tab you specify where you want to write the logs; you can write to one or more log providers at once. On the Details tab you specify which events you want to log for the selected task.

Please note that enabling event logging is immensely helpful when you are troubleshooting a package, but it also incurs additional overhead on SSIS in order to log the events and information. Hence you should only enable event logging when needed and only choose the events you want to log. Avoid logging all the events unnecessarily.

Q41. What is the LoggingMode property?

SSIS packages and all of the associated tasks or components have a property called LoggingMode. This property accepts three possible values: Enabled - to enable logging of that component, Disabled - to disable logging of that component and UseParentSetting - to use parent's setting of that component to decide whether or not to log the data.
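The way UseParentSetting defers to the enclosing object can be sketched as a walk up the container hierarchy. The Python below is a hypothetical illustration (`Executable` and `logging_enabled` are invented names). It also assumes that a top-level package left at UseParentSetting counts as not logging, which is a simplification made for this sketch.

```python
class Executable:
    """A package, container, or task with a LoggingMode and an optional parent."""

    def __init__(self, name, logging_mode="UseParentSetting", parent=None):
        self.name = name
        self.logging_mode = logging_mode
        self.parent = parent


def logging_enabled(executable):
    """Follow UseParentSetting upward until an explicit Enabled/Disabled is found."""
    node = executable
    while node.logging_mode == "UseParentSetting" and node.parent is not None:
        node = node.parent
    # Assumption: a root object still at UseParentSetting is treated as not logging.
    return node.logging_mode == "Enabled"


if __name__ == "__main__":
    package = Executable("Package", "Enabled")
    container = Executable("Sequence Container", parent=package)
    quiet_task = Executable("Noisy lookup", "Disabled", parent=container)
    normal_task = Executable("Load orders", parent=container)
    print(logging_enabled(normal_task))  # inherits Enabled from the package
    print(logging_enabled(quiet_task))   # explicitly disabled
```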

Q42. How is SSIS runtime engine different from the SSIS dataflow pipeline engine?

The SSIS Runtime Engine manages the workflow of the packages during runtime, which means its role is to execute the tasks in a defined sequence. As you know, you can define the sequence using precedence constraints. This engine is also responsible for providing support for event logging, breakpoints in the BIDS designer, package configuration, transactions and connections. The SSIS Runtime engine has been designed to support concurrent/parallel execution of tasks in the package.

The Dataflow Pipeline Engine is responsible for executing the data flow tasks of the package. It creates a dataflow pipeline by allocating in-memory structure for storing data in-transit. This means, the engine pulls data from source, stores it in memory, executes the required transformation in the data stored in memory and finally loads the data to the destination. Like the SSIS runtime engine, the Dataflow pipeline has been designed to do its work in parallel by creating multiple threads and enabling them to run multiple execution trees/units in parallel.

Q43. How is a synchronous (non-blocking) transformation different from an asynchronous (blocking) transformation in SQL Server Integration Services?

A transformation changes the data into the required format before loading it to the destination or passing it down the path. Transformations can be categorized as synchronous or asynchronous.

A transformation is called synchronous when it processes each incoming row (modifying the data into the required format in place, so that the layout of the result set remains the same) and passes it down the path. This means output rows are synchronous with the input rows (a 1:1 relationship between input and output rows), so the transformation reuses the same allocated buffer set and does not require additional memory. Please note that these kinds of transformations have lower memory requirements because they work on a row-by-row basis (and hence run considerably faster), and they do not block the data flow in the pipeline. Some examples are the Lookup, Derived Column, Data Conversion, Copy Column, Multicast, and Row Count transformations.

A transformation is called asynchronous when it requires all incoming rows to be stored locally in memory before it can start producing output rows. For example, an Aggregate Transformation requires all the rows to be loaded and stored in memory before it can aggregate and produce the output rows. As you can see, input rows are not in sync with output rows, and more memory is required to store the whole set of data (no memory reuse) for both the data input and output. These kinds of transformations have higher memory requirements (with a high chance of buffers spooling to disk if insufficient memory is available) and generally run slower. Asynchronous transformations are also called "blocking transformations" because they block the output rows until all input rows have been read into memory.

Q44. What is the difference between a partially blocking transformation and a fully blocking transformation in SQL Server Integration Services?

Asynchronous transformations, as discussed in last question, can be further divided in two categories depending on their blocking behavior:

Partially Blocking Transformations do not hold back the output until a full read of the inputs has occurred. However, they require new buffers/memory to be allocated to store the newly created result set, because the output from these kinds of transformations differs from the input set. For example, the Merge Join transformation joins two sorted inputs and produces a merged output. In this case, the data flow pipeline engine creates two sets of input buffers, but the merged output from the transformation requires another set of output buffers because the structure of the output rows is different from that of the input rows. This means the memory requirement for this type of transformation is higher than for synchronous transformations, where the transformation is completed in place.

Fully Blocking Transformations, apart from requiring an additional set of output buffers, also block the output completely until the whole input set has been read. For example, the Sort Transformation requires all input rows to be available before it can start sorting and pass the rows down the output path. These kinds of transformations are the most expensive and should be used only as needed. For example, if you can get sorted data from the source system, use that instead of using a Sort transformation to sort the data in transit/memory.
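The difference between a row-by-row transformation and a fully blocking one can be demonstrated with Python generators. This is an analogy rather than SSIS code: `derived_column`, `sort_rows`, and `traced` are hypothetical names, and the `log` list simply records when each input row is actually read.

```python
def traced(rows, log):
    """Pass rows through unchanged while recording each read in log."""
    for row in rows:
        log.append(("read", row))
        yield row


def derived_column(rows, compute):
    """Synchronous style: each input row produces one output row immediately."""
    for row in rows:
        yield compute(row)


def sort_rows(rows, key=None):
    """Fully blocking style: every input row is read before any output appears."""
    buffered = list(rows)
    buffered.sort(key=key)
    yield from buffered


if __name__ == "__main__":
    log = []
    output = derived_column(traced([3, 1, 2], log), lambda value: value * 10)
    print(next(output), "after reading", len(log), "row(s)")

    log = []
    output = sort_rows(traced([3, 1, 2], log))
    print(next(output), "after reading", len(log), "row(s)")
```

The first output row of the row-by-row transformation is available after a single read, while the sort cannot emit anything until the entire input has been consumed, which is why it needs memory for the whole data set.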

Q45. What is an SSIS execution tree and how can I analyze the execution trees of a data flow task?

The work to be done in the data flow task is divided by the dataflow pipeline engine into multiple chunks, called execution units. Each represents a group of transformations. An individual execution unit is called an execution tree, which can be executed by a separate thread in parallel with other execution trees. The memory structure it works on is called a data buffer; it is created by the data flow pipeline engine and scoped to an individual execution tree. An execution tree normally starts at either the source or an asynchronous transformation and ends at the first asynchronous transformation or a destination. During execution of the execution tree, the source reads the data, stores it in a buffer, executes the transformations in the buffer, and passes the buffer to the next execution tree in the path by passing pointers to the buffers.

To see how many execution trees are created and how many rows are stored in each buffer for an individual data flow task, you can enable logging of these events of the data flow task: PipelineExecutionTrees, PipelineComponentTime, PipelineInitialization, BufferSizeTuning, etc.
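The rule that a tree starts at a source or asynchronous transformation and ends at the next asynchronous transformation or destination can be sketched for a simple linear path. The Python below is a hypothetical illustration (`split_execution_trees` is an invented name) and assumes a single path with no branches. The real engine works on the full component graph.

```python
def split_execution_trees(components):
    """Split a linear data flow path into execution trees.

    components is a list of (name, is_asynchronous) pairs in path order,
    starting with the source and ending with the destination.
    """
    trees = []
    current = []
    for name, is_asynchronous in components:
        current.append(name)
        if is_asynchronous and len(current) > 1:
            # The tree ends at the asynchronous transformation's input...
            trees.append(current)
            # ...and a new tree starts at its output.
            current = [name]
    if current:
        trees.append(current)
    return trees


if __name__ == "__main__":
    path = [
        ("OLE DB Source", False),
        ("Derived Column", False),
        ("Sort", True),
        ("Lookup", False),
        ("OLE DB Destination", False),
    ]
    for tree in split_execution_trees(path):
        print(" -> ".join(tree))
```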

Q46. How can an SSIS package be scheduled to execute at a defined time or at a defined interval per day?

You can configure a SQL Server Agent Job with a job step type of SQL Server Integration Services Package; the job invokes the dtexec command line utility internally to execute the package. You can run the job (and in turn the SSIS package) on demand, or you can create a schedule for a one-time need or on a recurring basis.

Q47. What is an SSIS Proxy account and why would you create it?

When we try to execute an SSIS package from a SQL Server Agent Job, it can fail with the message "Non-SysAdmins have been denied permission to run DTS Execution job steps without a proxy account". This error message is generated if the account under which the SQL Server Agent service is running and the job owner are not sysadmins on the instance, or if the job step is not set to run under a proxy account associated with the SSIS subsystem.

Q48. How can you configure your SSIS package to run in 32-bit mode on 64-bit machine when using some data providers which are not available on the 64-bit platform?

In order to run an SSIS package in 32-bit mode, the SSIS project property Run64BitRuntime needs to be set to "False". The default value of this property is "True". Setting it to "False" is an instruction to load the 32-bit runtime environment rather than the 64-bit one, and your packages will still run without any additional changes. The property can be found under SSIS Project Property Pages -> Configuration Properties -> Debugging.

Q49. What do you mean by Microsoft Business Intelligence and what components of SQL Server supports this?

Microsoft defines its BI solution as a platform that provides better and more accurate information in an easily understandable format for quicker and better decision making. It consists of the BI tools from SQL Server, namely SQL Server Integration Services (SSIS), SQL Server Analysis Services (SSAS) and SQL Server Reporting Services (SSRS), together with Microsoft SharePoint and the Office products.

Q50. What are Breakpoints and Checkpoints in an SSIS Package?

§ Breakpoints in SSIS packages enable us to review the values of the variables, or other components of an SSIS package.

§ Checkpoints in SSIS packages enable us to rerun an SSIS package from the point of failure, so that we do not have to rerun the portion of the package that already ran successfully.
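The restart-from-failure idea behind checkpoints can be sketched in Python. This is only an illustration of the concept, not how SSIS stores its checkpoint file; the task names, the JSON format and the file name are assumptions made for the example.

```python
import json
import os
import tempfile
from typing import Callable, Dict, List


def run_with_checkpoint(tasks: Dict[str, Callable[[], None]], checkpoint_file: str) -> List[str]:
    """Run tasks in order, skipping those recorded as done in checkpoint_file.

    Returns the names of the tasks executed in this run.
    """
    done: List[str] = []
    if os.path.exists(checkpoint_file):
        with open(checkpoint_file) as fh:
            done = json.load(fh)
    executed: List[str] = []
    for name, task in tasks.items():
        if name in done:
            continue  # completed in an earlier run
        task()  # an exception here leaves the checkpoint file in place
        done.append(name)
        executed.append(name)
        with open(checkpoint_file, "w") as fh:
            json.dump(done, fh)
    if os.path.exists(checkpoint_file):
        os.remove(checkpoint_file)  # a fully successful run clears the checkpoint
    return executed


attempts = {"load": 0}


def extract() -> None:
    pass  # stands in for a task that succeeds


def load() -> None:
    attempts["load"] += 1
    if attempts["load"] == 1:
        raise RuntimeError("simulated failure on the first attempt")


tasks = {"extract": extract, "load": load}
checkpoint = os.path.join(tempfile.mkdtemp(), "package.chk")

try:
    run_with_checkpoint(tasks, checkpoint)
except RuntimeError:
    pass  # first run fails in "load"; "extract" is already recorded

second_run = run_with_checkpoint(tasks, checkpoint)
```

On the second run only the failed task is executed again, which is the behaviour the checkpoint feature is meant to provide.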

Q51. What are the various tabs available in an SSIS Project at Design time?

§ Control Flow.

§ Data Flow.

§ Event Handlers.

§ Package Explorer.

Q52. What are some of the events on which you can add an Event Handler in an SSIS Package?

§ OnError

§ OnPostExecute

§ OnProgress

§ OnTaskFailed

§ OnWarning

Q53. I need to have more than 1 destination in a Data Flow task, how can that be achieved?

Using Multicast Data Flow Transformation, it is possible to direct the output to more than 1 destination.

Q54. How do you deploy an SSIS Package?

Q55. What are the different destinations SSIS packages can be saved / stored for deployment?

SSIS packages can be saved inside the SQL Server or File System destination.

Q56. Where inside SQL Server are SSIS packages stored?

SSIS packages are stored inside the msdb database.

Q57. How does Error-Handling work in SSIS?

When a data flow component applies a transformation to column data, extracts data from sources, or loads data into destinations, errors can occur. Errors frequently occur because of unexpected data values.

Types of typical errors in SSIS:

-Data Connection Errors, which occur in case the connection manager cannot be initialized with the connection string. This applies to both Data Sources and Data Destinations, along with Control Flow tasks that use the connection strings.

-Data Transformation Errors, which occur while data is being transformed over a Data Pipeline from Source to Destination.

-Expression Evaluation Errors, which occur if expressions that are evaluated at run time perform invalid operations.

Q58. What is environment variable in SSIS?

An environment variable configuration sets a package property equal to the value in an environment variable.

Environmental configurations are useful for configuring properties that are dependent on the computer that is executing the package.
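The idea of letting an environment variable supply a property value can be sketched in Python. The property names and the variable names `SSIS_SOURCE_FOLDER` and `SSIS_BATCH_SIZE` are hypothetical, and the function is only a model of the behaviour, not SSIS code.

```python
import os
from typing import Dict


def apply_environment_configuration(properties: Dict[str, str], mapping: Dict[str, str]) -> Dict[str, str]:
    """Return a copy of properties with values overridden from environment variables.

    mapping maps a property name to the environment variable that supplies it.
    Properties whose variable is not set keep their design-time value.
    """
    configured = dict(properties)
    for prop, env_name in mapping.items():
        value = os.environ.get(env_name)
        if value is not None:
            configured[prop] = value
    return configured


# Hypothetical variables: one is set for the demo, the other is deliberately unset.
os.environ["SSIS_SOURCE_FOLDER"] = r"D:\Inbound"
os.environ.pop("SSIS_BATCH_SIZE", None)

design_time = {"SourceFolder": r"C:\Dev\Inbound", "BatchSize": "500"}
runtime = apply_environment_configuration(
    design_time,
    {"SourceFolder": "SSIS_SOURCE_FOLDER", "BatchSize": "SSIS_BATCH_SIZE"},
)
```

Because the value is read on the machine that runs the package, the same package can point at different folders on a developer workstation and on a production server.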

Q59: What are the Transformations available in SSIS?

AGGREGATE - Applies aggregate functions to record sets to produce new output records from aggregated values.

AUDIT - Adds package and task level metadata, such as Machine Name, Execution Instance, Package Name, Package ID, etc.

CHARACTERMAP - Performs column-level string operations, such as changing data from lower case to upper case.

CONDITIONALSPLIT - Separates the input into separate output pipelines based on Boolean expressions configured for each output.

COPYCOLUMN - Adds a copy of a column to the output; we can later transform the copy while keeping the original for auditing.

DATACONVERSION - Converts a column's data type to another type, that is, an explicit column conversion.

DATAMININGQUERY - Used to perform data mining queries against Analysis Services and manage prediction graphs and controls.

DERIVEDCOLUMN - Creates a new (computed) column from given expressions.

EXPORTCOLUMN - Exports the data in a column, such as an image, from the data flow to a file.

FUZZYGROUPING - Used for data cleansing by finding rows that are likely duplicates.

FUZZYLOOKUP - Used for pattern matching and ranking based on fuzzy logic.

IMPORTCOLUMN - Reads data, such as an image, from a file and adds it to a column in the data flow.

LOOKUP - Performs a lookup (search) of input values against a reference data set. It is used for exact matches only.

MERGE - Merges two sorted data sets into a single data flow.

MERGEJOIN - Merges two sorted data sets into a single data set using a join.

MULTICAST - Sends a copy of the input data to multiple outputs.

ROWCOUNT - Stores the resulting row count from the data flow / transformation into a variable.

ROWSAMPLING - Captures a sample of the rows in the data flow, specified as a number of rows (Percentage Sampling does the same by percentage).

UNIONALL - Merges multiple data sets into a single data set.

PIVOT - Converts rows into columns, turning a normalized data set into a less normalized but more compact one.

UNPIVOT - Converts columns into rows, turning a denormalized data set into a more normalized one.

Q60. How to log SSIS Executions?

SSIS includes logging features that write log entries when run-time events occur and can also write custom messages. This is not enabled by default. Integration Services supports a diverse set of log providers, and gives you the ability to create custom log providers. The Integration Services log providers can write log entries to text files, SQL Server Profiler, SQL Server, Windows Event Log, or XML files. Logs are associated with packages and are configured at the package level. Each task or container in a package can log information to any package log. The tasks and containers in a package can be enabled for logging even if the package itself is not.

Q61. How do you deploy SSIS packages?

The SSIS project build provides a Deployment Manifest file. We run the manifest file and decide whether to deploy to the File System or to SQL Server (msdb). SQL Server deployment is faster and more secure than File System deployment. Alternatively, we can import the package in SSMS from the File System or SQL Server.

Q62: What are variables and what is variable scope?

Variables store values that a SSIS package and its containers, tasks, and event handlers can use at run time. The scripts in the Script task and the Script component can also use variables. The precedence constraints that sequence tasks and containers into a workflow can use variables when their constraint definitions include expressions. Integration Services supports two types of variables: user-defined variables and system variables. User-defined variables are defined by package developers, and system variables are defined by Integration Services. You can create as many user-defined variables as a package requires, but you cannot create additional system variables.

Q63. What are the different types of Data flow components in SSIS?

There are 3 data flow components in SSIS.

1. Sources

2. Transformations

3. Destinations

Q64. Explain Audit Transformation?

It allows you to add auditing information to the data, as required by auditing regulations such as HIPAA and Sarbanes-Oxley (SOX). The auditing options that you can add to the transformed data through this transformation are:

1. ExecutionInstanceGUID: ID of the execution instance of the package

2. PackageID: ID of the package

3. PackageName

4. VersionID: GUID of the package version

5. ExecutionStartTime

6. MachineName

7. UserName

8. TaskName

9. TaskID: unique identifier of the Data Flow task that contains the Audit transformation

Q65. Explain Copy column Transformation?

This component simply copies a column to a new column, much like aliasing a column in T-SQL.

Q66. Explain Derived column Transformation?

The Derived Column transformation creates a new column, for example by putting the result of manipulating several columns into it. You can directly copy an existing column or build the new column from more than one column.

Q67. Explain Multicast Transformation?

This transformation sends its input to multiple output paths without any condition, unlike Conditional Split. It takes ONE input, makes copies of the data, and passes the same data through many outputs. Put simply: give it one input and take many outputs of the same data.

Q68.What is a Task?

A task is very much like a method in a programming language: it represents or carries out an individual unit of work. There are broadly two categories of tasks in SSIS, Control Flow tasks and Database Maintenance tasks. All Control Flow tasks are operational in nature except Data Flow tasks. Although there are around 30 control flow tasks which you can use in your package, you can also develop your own custom tasks in your choice of .NET programming language.

Q69.What is a workflow in SSIS ?

A workflow is a set of instructions that tells the Program Executor how to execute tasks and containers within SSIS packages.

Q70.What is the Control Flow?

When you start working with SSIS, you first create a package, which is nothing but a collection of tasks or package components. The control flow allows you to order the workflow, so you can ensure tasks/components get executed in the appropriate order.

Q71.What is a Transformation?

A transformation simply means bringing the data into a desired format. For example, you are pulling data from the source and want to ensure only distinct records are written to the destination, so duplicates are removed. Another example is if you have master/reference data and want to pull only related data from the source, and hence you need some sort of lookup. There are around 30 transformation tasks available, and this can be extended further with custom built tasks if needed.

Q72. How many different sources and destinations have you used?

It is very common to get all kinds of sources, so the more the candidate has worked with, the better for you. Common ones are SQL Server, CSV/TXT, Flat Files, Excel, Access, Oracle and MySQL, but also Salesforce and web data scraping.

Q73. Difference between Control Flow and Data Flow

Control Flow:

1. A control flow consists of one or more tasks and containers that execute when the package runs. We use precedence constraints to connect the tasks and containers in a package. SSIS provides three different types of control flow elements:

Containers that provide structures in packages,

Tasks that provide functionality, and

Precedence Constraints that connect the executables, containers, and tasks into an ordered control flow.

2. Control flow does not move data from task to task.

3. Tasks run in series if they are connected by precedence constraints, or in parallel otherwise.

Data Flow:

1. A data flow consists of the sources and destinations that extract and load data, the transformations that modify and extend data, and the paths that link sources, transformations, and destinations. The Data Flow task is the executable within the SSIS package that creates, orders, and runs the data flow. Data Sources, Transformations, and Data Destinations are the three important categories in the Data Flow.

2. Data flows move data, but a Data Flow task is also a task in the control flow; as such, its success or failure affects how your control flow operates.

3. Data is moved and manipulated through transformations.

4. Data is passed between each component in the data flow.

Q74. What are the different types of Transformations you have worked?

AGGREGATE: The Aggregate transformation applies aggregate functions to column values and copies the results to the transformation output. Besides aggregate functions, the transformation provides the GROUP BY clause, which you can use to specify groups to aggregate across.

The Aggregate Transformation supports following operations:

Group By, Sum, Average,

Count, Count Distinct, Minimum, Maximum
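As a rough illustration of what Group By combined with Sum, Count and Average produces, here is a small Python sketch. The sample rows and column meaning (region, amount) are made up for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def aggregate_by_key(rows: Iterable[Tuple[str, float]]) -> Dict[str, Dict[str, float]]:
    """Group (key, amount) rows by key and compute Sum, Count and Average."""
    totals = defaultdict(lambda: {"sum": 0.0, "count": 0})
    for key, amount in rows:
        totals[key]["sum"] += amount
        totals[key]["count"] += 1
    return {
        key: {"sum": t["sum"], "count": t["count"], "average": t["sum"] / t["count"]}
        for key, t in totals.items()
    }


# Hypothetical input rows: (region, sale amount).
sales = [("East", 100.0), ("West", 50.0), ("East", 300.0)]
summary = aggregate_by_key(sales)
```

Note that no output row can be produced until every input row has been read, which is why Aggregate is usually listed among the blocking transformations.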

AUDIT: Adds package and task level metadata, such as Machine Name, Execution Instance, Package Name, Package ID, etc.

CHARACTER MAP: Very useful for string formatting in SSIS; used to convert data to lower case or upper case.

CONDITIONAL SPLIT: Used to split the input source data based on conditions.

COPY COLUMN: Adds a copy of a column to the output; we can later transform the copy while keeping the original for auditing.

DATA CONVERSION: Converts a column's data type to another type, that is, an explicit column conversion.

DATA MINING QUERY: Used to perform data mining queries against Analysis Services and manage prediction graphs and controls.

DERIVED COLUMN: Creates a new (computed) column from given expressions.

EXPORT COLUMN: Exports the data in a column, such as an image, from the data flow to a file.

FUZZY GROUPING: Groups the rows in the dataset that contain similar values.

FUZZY LOOKUP: Used for pattern matching and ranking based on fuzzy logic.

IMPORT COLUMN: Reads data, such as an image, from a file and adds it to a column in the data flow.

LOOKUP: Performs a lookup (search) of input values against a reference data set. It is used to find exact matches only.

MERGE: Merges two sorted data sets of the same column structure into a single output.

MERGE JOIN: Merges two sorted data sets into a single dataset using a join.

MULTICAST: Used to create/distribute exact copies of the source dataset to one or more destination datasets.

ROW COUNT: Stores the resulting row count from the data flow / transformation into a variable.

ROW SAMPLING: Captures a sample of the rows in the data flow, specified as a number of rows (Percentage Sampling does the same by percentage).

UNION ALL: Merges multiple data sets into a single dataset.

PIVOT: Converts rows into columns, turning a normalized data set into a less normalized but more compact one.

UNPIVOT: Converts columns into rows, turning a denormalized data set into a more normalized one.
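The rows-to-columns and columns-to-rows directions of Pivot and Unpivot are easy to mix up, so a small Python sketch may help. It is only an illustration on hypothetical data (Customer, Product, Qty) and does not model the transformations' editors or options.

```python
from typing import Dict, List

Row = Dict[str, object]


def pivot(rows: List[Row], key: str, pivot_col: str, value_col: str) -> List[Row]:
    """Turn one row per (key, pivot value) into one row per key,
    with one column per distinct pivot value."""
    result: Dict[object, Row] = {}
    for row in rows:
        out = result.setdefault(row[key], {key: row[key]})
        out[str(row[pivot_col])] = row[value_col]
    return list(result.values())


def unpivot(rows: List[Row], key: str, pivot_col: str, value_col: str) -> List[Row]:
    """Inverse direction: expand every non-key column into its own row."""
    return [
        {key: row[key], pivot_col: col, value_col: val}
        for row in rows
        for col, val in row.items()
        if col != key
    ]


tall = [
    {"Customer": "Ann", "Product": "Ham", "Qty": 2},
    {"Customer": "Ann", "Product": "Milk", "Qty": 3},
    {"Customer": "Bob", "Product": "Ham", "Qty": 1},
]
wide = pivot(tall, "Customer", "Product", "Qty")
round_trip = unpivot(wide, "Customer", "Product", "Qty")
```

In this example `wide` has one row per customer with a column per product, and unpivoting it gives back the original three rows.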

Q75. What are Row Transformations, Partially Blocking Transformation, Fully Blocking Transformation with examples.

Row Transformation:

In a Row Transformation, each value is manipulated individually as rows pass through. The buffers can be reused by other components, such as OLE DB sources, OLE DB destinations, other Row transformations within the package, and other partially blocking transformations within the package. Examples: Copy Column, Audit, Character Map, Conditional Split, Multicast, Lookup, Import Column, Export Column

Partially Blocking Transformation:

These can reuse the buffer space allocated for the available Row transformations, and they also get new buffer space allocated exclusively for the transformation.

Example: Merge, Merge Join, Union All

Fully Blocking Transformation:

These make use of their own reserved buffers and do not share buffer space with other transformations or connection managers.

Example: Sort, Aggregate, Cache Transformation

Q76. Difference between Merge and UnionAll Transformations?

The Union All transformation combines multiple inputs into one output. The transformation inputs are added to the transformation output one after the other; no reordering of rows occurs.

The Merge transformation combines two sorted data sets of the same column structure into a single output. The rows from each dataset are inserted into the output based on the values in their key columns.

The Merge transformation is similar to the Union All transformations.

Use the Union All transformation instead of the Merge transformation in the following situations:

-The source input rows do not need to be sorted.

-The combined output does not need to be sorted.

-There are more than 2 source inputs.
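The contrast can be sketched with the Python standard library: `heapq.merge` interleaves two already sorted inputs by key, while `itertools.chain` simply appends any number of inputs without reordering. This is an analogy on made-up key values, not a model of the SSIS buffer handling.

```python
import heapq
from itertools import chain

# Two inputs that are already sorted on their key, as Merge requires.
sorted_a = [1, 4, 9]
sorted_b = [2, 3, 10]
# A third, unsorted input that only Union All can accept.
third = [7, 5]

# Merge-like behaviour: exactly two sorted inputs, output stays sorted.
merged = list(heapq.merge(sorted_a, sorted_b))

# Union All-like behaviour: any number of inputs, no sort requirement,
# rows are appended without reordering.
union_all = list(chain(sorted_a, sorted_b, third))
```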

Q77. Multicast, Conditional Split, Bulk Insert Tasks

The Multicast transformation takes the output of a single source and places it onto multiple destinations.

The Conditional Split transformation is used for splitting the input data based on specific conditions. Each condition is written as an SSIS expression.

The Multicast transformation generates exact copies of the source data, which means each recipient has the same number of records as the source, whereas the Conditional Split transformation divides the source data based on the defined conditions; rows that match none of the defined conditions are put on the default output.
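The difference between copying rows and routing rows can be sketched in Python. The output names ("Large", "Medium"), the sample rows and the rule that the first matching condition wins are assumptions chosen for the illustration.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, object]


def multicast(rows: List[Row], outputs: int) -> List[List[Row]]:
    """Every output receives its own full copy of the input rows."""
    return [[dict(row) for row in rows] for _ in range(outputs)]


def conditional_split(
    rows: List[Row], conditions: List[Tuple[str, Callable[[Row], bool]]]
) -> Dict[str, List[Row]]:
    """Each row goes to the first output whose condition is true,
    or to the default output when no condition matches."""
    result: Dict[str, List[Row]] = {name: [] for name, _ in conditions}
    result["default"] = []
    for row in rows:
        for name, predicate in conditions:
            if predicate(row):
                result[name].append(row)
                break
        else:
            result["default"].append(row)
    return result


# Hypothetical order rows.
rows = [
    {"OrderID": 1, "Amount": 1500},
    {"OrderID": 2, "Amount": 250},
    {"OrderID": 3, "Amount": 20},
]
conditions = [
    ("Large", lambda r: r["Amount"] >= 1000),
    ("Medium", lambda r: r["Amount"] >= 100),
]
copies = multicast(rows, 2)
split = conditional_split(rows, conditions)
```

With Multicast the total number of output rows is the input count times the number of outputs; with Conditional Split it always equals the input count.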

The Bulk Insert task is used to copy a large volume of data from a text file into a SQL Server destination.

Q78. What is the RetainSameConnection property and what is its impact?

Whenever a task uses a connection manager to connect to a source or destination database, a connection is opened and closed with the execution of that task. Sometimes you might need to open a connection, execute multiple tasks and close it at the end of the execution. This is where the RetainSameConnection property of the connection manager might help you. When you set this property to TRUE, the connection is opened the first time it is used and remains open until execution of the package completes.

Q79. Difference between Synchronous and Asynchronous Transformation

A synchronous transformation processes the input rows and passes them on in the data flow one row at a time. A synchronous transformation cannot create a new buffer.

When a transformation creates a new buffer for its output, it is an asynchronous transformation. The output buffer and output rows are not in sync with the input buffer.

The Web Service task lets us execute web service methods.

· First we configure an HTTP Connection manager which points to the WSDL of a web service.

· The Web Service task uses this HTTP Connection manager and lets us invoke methods in the service.

· The return values of a method can be stored in variables and used as input for other tasks.

Object means table, stored procedures, user defined functions etc.

Q89. Logging. Different types of Logging files?

Logging is used to log the information during the execution of package.

A log provider can be a text file, the SQL Server Profiler, a SQL Server relational database, a Windows event log, or an XML file. If necessary, you can define a custom log provider (e.g., a proprietary file format).

Cache Transformation

Q142. I have a source file that contains 1000 records, I want to insert 15% records in TableA and remaining in TableB which transformation I can use?

Percentage Sampling

Q143. There is no Union Transformation in SSIS, How to perform UNION operation by using built-in Transformation?

Q144. Before you create your SSIS Package and load data into destination, you want to analyze your data, which task will help you to achieve that?

Data Profiling Task

Q145. In Merge Join Transformation, we can use Inner Join, Left Join and Full Outer Join; Which Transformation is used to perform Cross Join.

Workaround is to add the Derived Column Transformation to add a new column to each of the two sources that you want to join (call the columns [JoinCol]) and put a literal value (e.g. "1") into that column for every single row. In the Merge Join transform, simply join on the two [JoinCol] columns.
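The constant-key trick can be checked quickly in Python: once both inputs carry the same literal value in [JoinCol], an ordinary inner join on that column pairs every left row with every right row. The sample data and helper names below are hypothetical.

```python
from typing import Dict, List

Row = Dict[str, object]


def add_join_col(rows: List[Row]) -> List[Row]:
    """Mimic the Derived Column step: add JoinCol = 1 to every row."""
    return [{**row, "JoinCol": 1} for row in rows]


def inner_join(left_rows: List[Row], right_rows: List[Row], key: str) -> List[Row]:
    """Plain inner join on a single key column."""
    return [
        {**left, **right}
        for left in left_rows
        for right in right_rows
        if left[key] == right[key]
    ]


# Hypothetical inputs.
colours = [{"Colour": "Red"}, {"Colour": "Blue"}]
sizes = [{"Size": "S"}, {"Size": "M"}, {"Size": "L"}]

crossed = inner_join(add_join_col(colours), add_join_col(sizes), "JoinCol")
```

The result has 2 x 3 = 6 rows, one for each colour and size combination, which is exactly a cross join.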

Q146. Direct Vs. Indirect Configuration in SSIS

Direct configuration Pros:

-Doesn't need environment variables creation or maintenance

-Scale well when multiple databases (Test or DEV) are used on the same server

-Changes can be made to the configurations files (.dtsconfig) when deployment is made using SSIS deployment utility

Cons:

-Need to specify configuration file that we want to use when the package is triggered with DTExec (/conf switch).

-If multiple layers of packages are used (parent/child packages), need to transfer configured values from the parent to the child package using parent packages variables which can be tricky (if one parent variable is missing, the rest of the parent package configs (parameters) will not be transferred).

Indirect configuration Pros:

-All packages can reference the configuration file(s) via environment variable

-Packages can be deployed simply using copy/paste or xcopy, no need to mess with SSIS deployment utility

-Packages or applications are not dependent on configuration switches when triggered with the DTExec utility (command line is much simpler)

Cons:

-Requires environment variables to be created

-Does not easily support multiple databases (TEST and Pre-Prod) being used on the same server

Q147. We get data from a flat file. How do we remove leading zeros, trailing zeros, or both before inserting into the destination?

Use the Derived Column transformation to remove leading zeros, trailing zeros, or both from the string. After removing the zeros, you can cast to any data type you want, such as Numeric, Int, Float, etc.

Leading Zeros: (DT_WSTR,50)(DT_I8)[YourInputColumn]

Trailing Zeros: REVERSE((DT_WSTR,50)(DT_I8)REVERSE([YourInputColumn]))

Leading and Trailing Zeros: REVERSE((DT_WSTR,50)(DT_I8)REVERSE((DT_WSTR,50)(DT_I8)[YourInputColumn]))
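The cast-and-reverse logic of these expressions can be mirrored in Python to see why it works: casting a digit string to an integer drops leading zeros, and reversing the string first turns trailing zeros into leading ones. The function names are made up for the sketch, and like the expressions it assumes the column contains digits only.

```python
def strip_leading_zeros(value: str) -> str:
    """Equivalent of (DT_WSTR,50)(DT_I8)[col]: cast to integer and back to string."""
    return str(int(value))


def strip_trailing_zeros(value: str) -> str:
    """Equivalent of REVERSE((DT_WSTR,50)(DT_I8)REVERSE([col]))."""
    return str(int(value[::-1]))[::-1]


def strip_both(value: str) -> str:
    """Leading zeros are removed first, then trailing zeros, as in the nested expression."""
    return strip_trailing_zeros(strip_leading_zeros(value))


leading = strip_leading_zeros("000123")
trailing = strip_trailing_zeros("123000")
both = strip_both("0012300")
```

One design caveat follows directly from the cast: a value containing non-digit characters would fail the integer conversion, so such rows need to be filtered or redirected first.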

Q148. Import Data in SSMS:

We can't apply transformations on source data with "Import Data", "Export Data".

Import and Export Wizard in SSIS: We can apply transformations on source data

Q149. How do I troubleshoot SSIS packages failed execution in SQL Agent job?

When an SSIS package fails while running in a SQL Agent job, first consider the following conditions:

1. The user account that is used to run the package under SQL Server Agent differs from the original package author.

2. The user account does not have the required permissions to make connections or to access resources outside the SSIS package.

The following 4 issues are commonly encountered in the SSIS forum:

1. The package's Protection Level is set to EncryptSensitiveWithUserKey but your SQL Server Agent service account is different from the SSIS package creator.

2. Data source connection issue.

3. File or registry access permission issue.

4. No 64-bit driver issue.

Package Protection Level issue: For the 1st issue, you can follow these steps to troubleshoot:

1. Check what the Protection Level is in your SSIS package.

2. If the Protection Level is set to EncryptSensitiveWithUserKey, check the Creator in your SSIS package and compare it with the SQL Server Agent Service account.

3. If the Creator is different from the SQL Server Agent Service account, then the sensitive data of the SSIS package cannot be correctly decrypted, which leads to the failure.

A common solution to this issue is to create a proxy account for SSIS in SQL Server Agent and then specify the proxy account as the "Run as" account in the job step. The proxy account must be the same as the SSIS package creator. You can also change the SSIS package protection level to EncryptSensitiveWithPassword and specify the password in the command line in the job step.

Data Source Connection Issue: It happens when you are using Windows Authentication for your data source. In this case, you need to make sure that the SQL Server Agent service account or your proxy account has permission to access your database.

Q150. How to run SSIS Packages using 32-bit drivers on 64-bit machine?

On a 64-bit operating system, installing Integration Services installs both the 32-bit and 64-bit versions of the DTExec command-line tool, which is used to execute SSIS packages. If your SSIS package references any 32-bit DLL or 32-bit driver, you must use the 32-bit version of DTExec to execute it. In that case, also make sure you change the project property Run64BitRuntime to False before you debug the package in BIDS; otherwise the package will try to load 64-bit DLLs instead of 32-bit ones.

To change this setting: right-click the project node and, under the Debugging option, set Run64BitRuntime=False

Q6: What is a workflow in SSIS 2014?

A workflow is a set of instructions that tells the Program Executor how to execute tasks and containers within SSIS packages.

Q7. What is an SSIS Package?

An SSIS package is a collection of tasks that extract, transform and load data. Though the primary functionality of SSIS packages is ETL, they can be used for other maintenance tasks such as database backups, index rebuilds, deleting old backups, etc.

Q8. How do you create SSIS Packages? OR What tools do you use for creating SSIS Packages?

You can create SSIS packages using Business Intelligence Development Studio, in short "BIDS". It can be installed using the same SQL Server installation media that is used to install SQL Server. If all the features are selected at the time of installation, then BIDS is installed along with Management Studio, Books Online and other tools.

Q9: What is the control flow?

A control flow consists of one or more tasks and containers that execute when the package runs. To control order or define the conditions for running the next task or container in the package control flow, we use precedence constraints to connect the tasks and containers in a package. A subset of tasks and containers can also be grouped and run repeatedly as a unit within the package control flow. SQL Server Integration Services (SSIS) provides three different types of control flow elements: Containers that provide structures in packages, Tasks that provide functionality, and Precedence Constraints that connect the executables, containers, and tasks into an ordered control flow.

Q10: What is the data flow?

A data flow consists of the sources and destinations that extract and load data, the transformations that modify and extend data, and the paths that link sources, transformations, and destinations. The Data Flow task is the executable within the SSIS package that creates, orders, and runs the data flow. A separate instance of the data flow engine is opened for each Data Flow task in a package. Data Sources, Transformations, and Data Destinations are the three important categories in the Data Flow.

Q11. What is the Data Flow Engine?

The Data Flow Engine, also called the SSIS pipeline engine, is responsible for managing the flow of data from the source to the destination and performing transformations (lookups, data cleansing etc.). Data flow uses memory oriented architecture, called buffers, during the data flow and transformations which allows it to execute extremely fast. This means the SSIS pipeline engine pulls data from the source, stores it in buffers (in-memory), does the requested transformations in the buffers and writes to the destination. The benefit is that it provides the fastest transformation as it happens in memory and we don't need to stage the data for transformations in most cases.

Q12. What is a Transformation?

A transformation simply means bringing the data into a desired format. For example, you are pulling data from the source and want to ensure only distinct records are written to the destination, so duplicates are removed. Another example is if you have master/reference data and want to pull only related data from the source, and hence you need some sort of lookup. There are around 30 transformation tasks available, and this can be extended further with custom built tasks if needed.

Q13. What is a Task?

A task is very much like a method of any programming language which represents or carries out an individual unit of work. There are broadly two categories of tasks in SSIS, Control Flow tasks and Database Maintenance tasks. All Control Flow tasks are operational in nature except Data Flow tasks. Although there are around 30 control flow tasks which you can use in your package you can also develop your own custom tasks with your choice of .NET programming language.

Q14. What is a Precedence Constraint and what types of Precedence Constraint are there?

SSIS allows you to place as many tasks as you want in the control flow. You can connect all these tasks using connectors called Precedence Constraints. Precedence Constraints allow you to define the logical sequence of tasks in the order they should be executed. You can also specify a condition to be evaluated before the next task in the flow is executed.

These are the types of precedence constraints; the condition can be a constraint, an expression, or both:

Success (next task will be executed only when the last task completed successfully) or

Failure (next task will be executed only when the last task failed) or

Completion (next task will be executed no matter whether the last task succeeded or failed).
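The three constraint values, optionally combined with an expression, boil down to a small decision rule. The Python sketch below models the case where both the constraint and the expression must hold; the function name is made up, and the third value is spelled "Completion" as it appears in the SSIS designer.

```python
def should_run_next(constraint: str, previous_succeeded: bool, expression: bool = True) -> bool:
    """Decide whether the next task runs.

    constraint is "Success", "Failure" or "Completion"; expression stands for
    the result of an optional precedence expression (True when none is set).
    """
    if constraint == "Success":
        outcome_ok = previous_succeeded
    elif constraint == "Failure":
        outcome_ok = not previous_succeeded
    elif constraint == "Completion":
        outcome_ok = True  # runs whether the previous task succeeded or failed
    else:
        raise ValueError(f"unknown constraint: {constraint}")
    return outcome_ok and expression


runs_after_success = should_run_next("Success", previous_succeeded=True)
runs_error_handler = should_run_next("Failure", previous_succeeded=False)
blocked_by_expression = should_run_next("Completion", previous_succeeded=False, expression=False)
```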

Q15. What is a container and how many types of containers are there?

A container is a logical grouping of tasks which allows you to manage the scope of the tasks together.

These are the types of containers in SSIS:

Sequence Container - Used for grouping logically related tasks together

For Loop Container - Used when you want to have repeating flow in package

For Each Loop Container - Used for enumerating each object in a collection; for example a record set or a list of files.

Apart from the above mentioned containers, there is one more container called the Task Host Container which is not visible from the IDE, but every task is contained in it (the default container for all the tasks).

Q16. What are variables and what is variable scope?

A variable is used to store values. There are basically two types of variables, System Variable (like ErrorCode, ErrorDescription, PackageName etc) whose values you can use but cannot change and User Variable which you create, assign values and read as needed. A variable can hold a value of the data type you have chosen when you defined the variable.

Variables can have a different scope depending on where they were defined. For example, you can have package level variables, which are accessible to all the tasks in the package, and there can also be container level variables, which are accessible only to those tasks that are within the container.

Q17. What is the transaction support feature in SSIS?

When you execute a package, every task of the package executes in its own transaction. What if you want to execute two or more tasks in a single transaction? This is where the transaction support feature helps. You can group all your logically related tasks in a single container and then set the transaction property appropriately so that all the tasks in the group run in a single transaction. This way you can ensure that either all of the tasks complete successfully or, if any of them fails, the whole transaction is rolled back.

Q18. What properties do you need to configure in order to use the transaction feature in SSIS?

Suppose you want to execute five tasks in a single transaction. In this case you can place all five tasks in a Sequence Container and set the container's TransactionOption and IsolationLevel properties appropriately.

The TransactionOption property expects one of these three values:

Supported - The container/task does not start a transaction of its own, but if the parent object has already started a transaction it participates in it

Required - The container/task starts a new transaction unless the parent object has already started one, in which case it joins the parent's transaction

NotSupported - The container/task neither starts a transaction nor participates in any transaction started by the parent object
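
The three values can be summarised as a small decision function. This is a conceptual sketch only, with a hypothetical `resolve_transaction` helper. It assumes the documented behaviour in which Required joins a transaction the parent has already started and starts a new one only when none exists.

```python
def resolve_transaction(option, parent_transaction, start_new):
    """Return the transaction an executable runs in, or None for no transaction.

    option: "Supported", "Required" or "NotSupported".
    parent_transaction: the parent's transaction, or None if it has none.
    start_new: zero-argument callable that creates a new transaction
               (hypothetical; stands in for the work MS DTC does).
    """
    if option == "NotSupported":
        return None  # never takes part in a transaction
    if option == "Supported":
        return parent_transaction  # joins the parent's transaction if there is one
    if option == "Required":
        if parent_transaction is not None:
            return parent_transaction  # join the existing transaction
        return start_new()  # otherwise start one
    raise ValueError("unknown TransactionOption " + repr(option))
```

A common arrangement is a Sequence Container set to Required with its child tasks left at Supported, so the children all join the container's transaction.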

The isolation level dictates how two or more transactions maintain consistency and concurrency when they are running in parallel.

Q19. When I enabled transactions in an SSIS package, it failed with this exception: "The Transaction Manager is not available. The DTC transaction failed to start." What caused this exception and how can it be fixed?

SSIS uses the MS DTC (Microsoft Distributed Transaction Coordinator) Windows Service for transaction support. As such, you need to ensure this service is running on the machine where you are actually executing the SSIS packages or the package execution will fail with the exception message as indicated in this question.

Q20. What is event handling in SSIS?

As in many programming languages, SSIS and its components raise different events during execution. You can write an event handler to capture an event and handle it in a few different ways. For example, suppose you have a data flow task and, before it executes, you want to make some environmental changes such as creating a table to write data into or deleting/truncating the table you want to write to. Along the same lines, after the data flow task executes you want to clean up some staging tables. In this circumstance you can write an event handler for the OnPreExecute event of the data flow task, which is executed before the actual execution of the data flow. Similarly, you can write an event handler for the OnPostExecute event of the data flow task, which is executed after the data flow task has run. Please note that not all tasks raise the same events. Some events are specific to a particular task, so you can use them with that object and not with others.

Q21. How do you write an event handler?

First, open your SSIS package in Business Intelligence Development Studio (BIDS) and click on the Event Handlers tab. Next, select the executable/task from the left side combo box and then select the event you want to handle from the right side combo box. Finally, click on the hyperlink to create the event handler. So far you have only created the event handler; you have not specified any action. To do that, simply drag the required task from the toolbox onto the event handler design surface and configure it appropriately.

Q22. What is the DisableEventHandlers property used for?

Consider you have a task or package with several event handlers, but for some reason you do not want event handlers to be called. One simple solution is to delete all of the event handlers, but that would not be viable if you want to use them in the future. This is where you can use the DisableEventHandlers property. You can set this property to TRUE and all event handlers will be disabled. Please note with this property you simply disable the event handlers and you are not actually removing them. This means you can set this value to FALSE and the event handlers will once again be executed.
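
To make the behaviour concrete, here is a small conceptual model of a task that raises OnPreExecute and OnPostExecute around its work and honours a flag that mirrors DisableEventHandlers. The `Executable` class is hypothetical and is not the SSIS API; failures and the OnError event are deliberately left out of the sketch.

```python
class Executable:
    """Toy stand-in for an SSIS task with event handlers."""

    def __init__(self, name, work):
        self.name = name
        self.work = work                     # callable that does the task's job
        self.handlers = {}                   # event name -> list of callables
        self.disable_event_handlers = False  # mirrors DisableEventHandlers

    def on(self, event, handler):
        """Attach a handler to an event such as "OnPreExecute"."""
        self.handlers.setdefault(event, []).append(handler)

    def _raise_event(self, event):
        if self.disable_event_handlers:
            return  # handlers stay attached but are not called
        for handler in self.handlers.get(event, []):
            handler(self.name)

    def execute(self):
        self._raise_event("OnPreExecute")
        self.work()
        self._raise_event("OnPostExecute")


log = []
load_sales = Executable("Load Sales", lambda: log.append("run data flow"))
load_sales.on("OnPreExecute", lambda name: log.append("truncate staging table"))
load_sales.on("OnPostExecute", lambda name: log.append("clean up staging table"))
load_sales.execute()
```

After this runs, `log` holds the pre-execute action, the data flow and the post-execute action in that order. Setting `load_sales.disable_event_handlers = True` and executing again runs only the data flow, and setting it back to `False` restores the handlers without re-creating them.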

Q23. What is SSIS validation?

SSIS validates the package and all of its tasks to ensure they have been configured correctly, so that with the given set of configurations and values all the tasks and the package will execute successfully. In other words, during the validation process SSIS checks that the source and destination locations are accessible and that the metadata about the source and destination tables stored with the package is correct, so that the task will not fail when executed. The validation process reports warnings and errors depending on the validation failure detected. For example, if the source/destination tables or columns have been changed or dropped, this is reported as an error, whereas if you are reading more columns than you write to the destination object, this is flagged as a warning.

Q24. Define design time validation versus run time validation.

Design time validation is performed when you are opening your package in BIDS whereas run time validation is performed when you are actually executing the package.

Q25. Define early validation (package level validation) versus late validation (component level validation).

When a package is executed, the package goes through the validation process. All of the components/tasks of package are validated before actually starting the package execution. This is called early validation or package level validation. During execution of a package, SSIS validates the component/task again before executing that particular component/task. This is called late validation or component level validation.

Q26. What is DelayValidation and what is the significance?

As I said before, during early validation all of the components of the package are validated along with the package itself. If any component/task fails to validate, SSIS will not start the package execution. In most cases this is fine, but what if the second task depends on the first task? For example, say you create a table in the first task and refer to the same table in the second task. When early validation starts, it will not be able to validate the second task because the table it depends on has not been created yet. Keep in mind that early validation is performed before the package execution starts. So what should we do in this case? How can we ensure the package is executed successfully and the logical flow of the package is correct? This is where you can use the DelayValidation property. In the above scenario you should set the DelayValidation property of the second task to TRUE, in which case early validation (package level validation) is skipped for that task and the task is validated only during late validation (component level validation). Please note that the DelayValidation property only lets you skip early validation for that specific task; there is no way to skip late (component level) validation.
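
The create-table scenario can be modelled in a few lines. This is a conceptual sketch with hypothetical names (`Task`, `run_package`, `build_package`), not SSIS code: early validation checks every task that has not delayed validation before anything runs, and late validation checks each task again just before it executes.

```python
class Task:
    def __init__(self, name, validate, execute, delay_validation=False):
        self.name = name
        self.validate = validate    # callable returning True when the task is valid
        self.execute = execute      # callable doing the task's work
        self.delay_validation = delay_validation


def run_package(tasks):
    # Early (package level) validation: skipped for tasks with DelayValidation.
    for task in tasks:
        if not task.delay_validation and not task.validate():
            return "early validation failed: " + task.name
    # Late (component level) validation: always runs, just before execution.
    for task in tasks:
        if not task.validate():
            return "late validation failed: " + task.name
        task.execute()
    return "succeeded"


def build_package(delay_second_task):
    tables = set()  # stands in for the tables that exist in the database
    create = Task("Create table",
                  validate=lambda: True,
                  execute=lambda: tables.add("staging"))
    load = Task("Load table",
                validate=lambda: "staging" in tables,
                execute=lambda: None,
                delay_validation=delay_second_task)
    return [create, load]
```

With `build_package(False)` the package stops at early validation because the table does not exist yet; with `build_package(True)` the second task is validated only after the first has created the table, so the package succeeds.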

Q27. How would you do Logging in SSIS?

SSIS provides a built-in logging feature that you configure per package. It can log the details of various events, such as OnError and OnWarning, to several log providers: a text file, a SQL Server table, an XML file, SQL Server Profiler or the Windows Event Log.

Q28. How would you do Error Handling?

An SSIS package can mainly have two types of errors:

a) Procedure Error: can be handled in the control flow by using precedence constraints to redirect the execution flow.

b) Data Error: is handled in the Data Flow Task by redirecting the failing rows through the Error Output of a component.
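
The idea behind an error output is that a bad row is diverted to a second stream instead of failing the whole data flow. A minimal sketch of that idea follows; `convert_rows` and the `error_reason` field are hypothetical and only illustrate the redirect-row pattern.

```python
def convert_rows(rows):
    """Convert string fields to typed values, redirecting rows that fail.

    Returns (good_rows, error_rows), loosely like the default output and
    the error output of a data flow component.
    """
    good_rows, error_rows = [], []
    for row in rows:
        try:
            good_rows.append({"id": int(row["id"]),
                              "amount": float(row["amount"])})
        except (KeyError, TypeError, ValueError) as exc:
            # Redirect the original row, plus a note on why it failed.
            error_rows.append({**row, "error_reason": str(exc)})
    return good_rows, error_rows


source_rows = [
    {"id": "1", "amount": "10.5"},
    {"id": "2", "amount": "abc"},   # cannot be converted to a number
]
good, errors = convert_rows(source_rows)
```

The first row lands in `good` and the second in `errors`, where it could be written to an error table for later inspection.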

Q29. How to pass property value at Run time? How do you implement Package Configuration?

A property value, such as the connection string of a Connection Manager, can be passed to the package at run time using package configurations. Package Configuration provides different options: XML file, environment variable, SQL Server table, registry entry or parent package variable.
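
To show what an XML configuration carries, the sketch below parses a simplified file modelled on the .dtsConfig layout and collects the property paths with their configured values. The connection name `SourceDB`, the server name `PRODSQL01` and the `read_overrides` helper are made up for illustration; SSIS itself applies these values when the package loads, so you would not normally write this code.

```python
import xml.etree.ElementTree as ET

# Simplified sample; a generated .dtsConfig file also carries a heading element.
SAMPLE_CONFIG = r"""<DTSConfiguration>
  <Configuration ConfiguredType="Property"
                 Path="\Package.Connections[SourceDB].Properties[ConnectionString]"
                 ValueType="String">
    <ConfiguredValue>Data Source=PRODSQL01;Initial Catalog=Sales;Integrated Security=SSPI;</ConfiguredValue>
  </Configuration>
</DTSConfiguration>"""


def read_overrides(xml_text):
    """Map each configured property path to the value it should receive."""
    root = ET.fromstring(xml_text)
    overrides = {}
    for config in root.findall("Configuration"):
        path = config.get("Path")
        overrides[path] = config.findtext("ConfiguredValue", default="")
    return overrides


overrides = read_overrides(SAMPLE_CONFIG)
```

Each key in `overrides` names a property in the package, here the ConnectionString of one connection manager, and the value is what replaces the design-time setting.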

Q30. How would you deploy a SSIS Package on production?

1. Create the deployment utility by setting the project's CreateDeploymentUtility property to True.

2. The utility is created in the bin\Deployment folder of the project as soon as the project is built.

3. Copy all the files in that folder to the production server and use the manifest file to deploy the packages there.

Q31. How would you pass a variable value to Child Package?

This is a very frequent question, and it looks more complicated to programmers than it is.

Passing a variable value to a child package is actually a trivial task. We can pass the value by configuring a parent package variable in the package configuration, but there is an easier way to achieve this, and it rests on the fundamental principle of variable scope.

When you call a child package, it behaves like a container, and all the variables defined above it in the hierarchy are accessible in the child package.

Let me show this with an example in which I declare a variable "ParentVar" in my parent package and call a child package that accesses "ParentVar" and displays it in a message box.

1) Parent: Create Parent Package and declare a variable "ParentVar"

2) Child: Create a Child package, add a Script Task and define ParentVar as a read-only variable

3) Child: Now in the script you can use ParentVar like any other variable; for example, I am using it to display its value in a message box. I would suggest creating another variable in the child package, assigning the parent package variable's value to it and using that child package variable throughout the package.

4) Child: Whole Child package will look like

5) Parent: Now in parent package call the child package through Execute Package task.

The Parent package will look like

6) Result: Execute the Parent package. It will in turn call the Child package, which will display the message box.

This is a simple method for using a parent package variable in a child package.

Q32. What are new features in SSIS 2008?

1) Improved Parallelism of Execution Trees:

The biggest performance improvement in SSIS 2008 is the incorporation of parallelism in the processing of execution trees. In SSIS 2005, each execution tree used a single thread, whereas in SSIS 2008 the data flow engine is redesigned to utilize multiple threads and take advantage of dynamic scheduling to execute multiple components in parallel, including components within the same execution tree.

2) C# Support for Scripting:

SSIS 2008 incorporates the new Visual Studio Tools for Applications (VSTA) scripting engine. An advantage of VSTA is that scripts can be written in Visual C# as well as Visual Basic .NET, whereas SSIS 2005 supported only Visual Basic .NET.

3) New ADO.NET Source and Destination Component:

SSIS 2008 gets a new Source and Destination Component for ADO.NET Record sets.

4) Improved Lookup Transformation:

In SSIS 2008, the Lookup Transformation has faster cache loading and lookup operations. It has new caching options, including the ability for the reference dataset to use a cache file (.caw) accessed by the Cache Connection Manager. In addition, the same cache can be shared between multiple Lookup Transformations.
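
The benefit of a shared cache can be pictured as loading the reference data once and letting several lookups read from it. The sketch below is only an analogy in plain Python with hypothetical helpers `build_cache` and `lookup`; it does not reproduce the Cache Connection Manager or the .caw file format.

```python
def build_cache(reference_rows, key):
    """Load the reference data once into an in-memory dictionary."""
    return {row[key]: row for row in reference_rows}


def lookup(rows, cache, key):
    """Split rows into matches (enriched with reference columns) and no-matches."""
    matched, unmatched = [], []
    for row in rows:
        reference = cache.get(row[key])
        if reference is None:
            unmatched.append(row)
        else:
            matched.append({**row, **reference})
    return matched, unmatched


customers = [{"customer_id": 1, "region": "East"},
             {"customer_id": 2, "region": "West"}]
customer_cache = build_cache(customers, "customer_id")  # built once

orders = [{"order_id": 10, "customer_id": 1},
          {"order_id": 11, "customer_id": 3}]
returns = [{"return_id": 20, "customer_id": 2}]

# Two separate lookups reuse the same cache instead of reloading the reference data.
order_matches, order_misses = lookup(orders, customer_cache, "customer_id")
return_matches, return_misses = lookup(returns, customer_cache, "customer_id")
```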

5) New Data Profiling Task:

SSIS 2008 has a new debugging aid, the Data Profiling Task, that can help users analyze the data flowing through the package. In many cases, execution errors are caused by unexpected variations in the data that is being transferred. The Data Profiling Task can help users discover the cause of these errors by giving better visibility into the data.

6) New Connections Project Wizard:

One of the main usability enhancements in SSIS 2008 is the new Connections Project Wizard. The Connections Project Wizard guides the user through the steps required to create sources and destinations.

Q33. What are SSIS Connection Managers?

When we talk of integrating data, we are actually pulling data from different sources and writing it to a destination. But how do you connect to the source and destination systems? This is where connection managers come into the picture. A connection manager represents a connection to a system and includes the data provider information, the server name, the database name, the authentication mechanism, etc.

Q34. What are source and destination adapters?

A source adapter indicates a source in the Data Flow from which to pull data. The source adapter uses a connection manager to connect to the source, and along with it you can also specify the query method and the query used to pull data from the source.